Survive in Extremistan.

How to be a non-naive paranoid, when it is required to be paranoid.

Oliver López-Corona

Abstract

To survive repeated exposure to long-tailed risks that include absorbing barriers (risk of ruin), it is necessary to act in a precautionary and anticipatory manner, in what we might call non-naive “paranoia.” In this essay I discuss the suitability of this precautionary approach for surviving in Extremistan (environments of uncertainty and complexity dominated by extreme events). I identify some key elements from my experience in a specific extreme environment, mountaineering, and then transfer them to the domain of national security, for example the current coronavirus epidemic. I also discuss the concept of antifragility, and finally I comment on the skills needed to understand complexity, which could be cultivated at places such as the Center for Naval Higher Studies (México).

Introduction

A couple of months ago, while studying different aspects of risk in contexts of complexity and long-tailed processes, I came across a class from the Center for Homeland Defense and Security at the Naval Postgraduate School entitled “Living in Extremistan” (https://www.youtube.com/watch?v=zhWXl9qVj74), in which Ted G. Lewis summarizes part of his work (Lewis, 2010; Lewis et al., 2011) on the prevalence of infrequent but high-consequence events in our lives, our infrastructure and even our national security.

Consider, for example, the repercussions of events such as the terrorist attacks of September 11, 2001. Until that moment, such an attack in the heart of the most militarily powerful nation on the planet was unthinkable. It was so unthinkable that none of the air safety protocols that now seem obviously necessary were in place. In the economic sphere, we can think of the so-called “December Error”, which occurred after a period of apparent stability and bonanza. We have shown that this was in reality an unstable stability that weakened the system, so that the error was not one of December but was generated much earlier, and even showed some early warnings (López-Corona and Hernández, 2019). This “December Error” soon spread throughout the world economic system as the “Tequila Effect”, also as a repercussion of fragile conditions (Taleb, 2018).

Both events belong to a category known as Black Swans (Taleb, 2007). The name refers to the fact that for hundreds or even thousands of years Western culture knew only white swans. The black swan (Cygnus atratus) is a species native to Australia that was not known in the West until 1697, when Dutch explorers reached Western Australia. A Black Swan is an event with the following three attributes: (1) it is an outlier, since it lies outside the range of regular expectations (inconsistent with the mean), because nothing in the past can convincingly point to its possibility (it sits far out in the long tail of its probability distribution); (2) it carries an extreme impact; (3) despite its rarity, human nature makes us invent explanations for its occurrence after the fact, making it seem explainable and predictable.

In general, Black Swans are events described by a different type of chance than the one we are used to. Are there different types of chance? Yes. The type of chance with which we are intellectually familiar, that is, the one we study at university and even in postgraduate degrees, is the one that comes from Gaussian processes (described by a Gaussian or normal probability distribution). These random phenomena are dominated by average values, and almost no event lies far from them; the law of large numbers and other statistical properties hold, which makes them relatively easy to analyze. On the other hand, there are the random events of real life, which we do not study at university or in postgraduate degrees, but whose consequences we experience every day. These events cannot be described by a normal distribution but by what are known as power laws, which, put very simply, express scale invariance: the phenomenon behaves in the same way at different scales, or in other words there is no characteristic or privileged scale. In a phenomenon described by a normal (bell-shaped) distribution there is a dominant scale, that of the average. In contrast, phenomena described by power-law distributions, also known as long-tailed distributions, have no characteristic scale and are governed by extreme events (Black Swans).
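The contrast between the two types of chance can be made concrete with a small simulation. The sketch below is only an illustration under assumed parameters that are not in the original text: a Gaussian with mean 170 and standard deviation 10 (a height-like quantity) and a Pareto power law with tail exponent 1.1 (a wealth-like quantity). It measures how much of the total is contributed by the single largest observation.

```python
import random


def largest_share(samples):
    """Fraction of the total contributed by the single largest observation."""
    return max(samples) / sum(samples)


def compare_largest_share(n=10_000, alpha=1.1, seed=42):
    """Return (gaussian_share, power_law_share) for n simulated observations.

    The Gaussian parameters and the Pareto exponent `alpha` are illustrative
    assumptions, chosen only to contrast a short tail with a long tail.
    """
    rng = random.Random(seed)
    gaussian = [rng.gauss(170.0, 10.0) for _ in range(n)]
    power_law = [rng.paretovariate(alpha) for _ in range(n)]
    return largest_share(gaussian), largest_share(power_law)


if __name__ == "__main__":
    gaussian_share, power_law_share = compare_largest_share()
    print(f"Gaussian: largest observation is {gaussian_share:.4%} of the total")
    print(f"Power law: largest observation is {power_law_share:.4%} of the total")
```

In the Gaussian sample no single observation matters, while in the power-law sample one extreme event can account for a visible fraction of the whole sum, which is the sense in which such processes are governed by extremes.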

Given the above, it is essential to be able to tell whether a risk belongs to normal processes or short-tailed power laws, dominated by means, that is, to Mediocristan, or to processes dominated by extreme events (long-tailed distributions), that is, to Extremistan. According to Lewis (2010) and Lewis et al. (2011), the risks that belong to Mediocristan require a different approach from those that belong to Extremistan. The risks of Mediocristan require a prevention approach: building greater reaction capacity (e.g., a greater number of firefighters), planning, coordination and collaboration. The risks of Extremistan, on the other hand, require better immediate response capabilities, which implies, for example, greater intelligence and higher levels of redundancy.

The problem I see in this approach is that, although it seems operational, it has a fundamental flaw. In Extremistan the law of large numbers does not hold in practice: the sample mean does not converge, or does so very slowly, so that a masking phenomenon occurs (Taleb, 2020) in which one may think one is facing a Mediocristan process when in reality it is an Extremistan one. One might think that, if this is a convergence problem, a larger sample or a longer wait is enough. However, it can be shown that for some long-tailed distributions, such as the Cauchy distribution, the mean simply does not exist. This means that one can never really be sure that one is not in Extremistan.
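The failure of averaging can be checked numerically. The following sketch, which is an illustration and not part of the cited works, repeats the experiment "take the mean of n draws" many times and measures how spread out those means are (their interquartile range). The sample size, number of repetitions and seed are arbitrary assumptions.

```python
import math
import random
import statistics


def cauchy(rng):
    """One standard Cauchy draw, obtained with the inverse-CDF method."""
    return math.tan(math.pi * (rng.random() - 0.5))


def iqr_of_sample_means(draw, n, repetitions, rng):
    """Interquartile range of the sample mean of n draws, over many repetitions."""
    means = [sum(draw(rng) for _ in range(n)) / n for _ in range(repetitions)]
    q1, _, q3 = statistics.quantiles(means, n=4)
    return q3 - q1


def spread_comparison(n=500, repetitions=400, seed=7):
    """Return (gaussian_iqr, cauchy_iqr) for sample means of size n."""
    rng = random.Random(seed)
    gaussian_iqr = iqr_of_sample_means(lambda r: r.gauss(0.0, 1.0), n, repetitions, rng)
    cauchy_iqr = iqr_of_sample_means(cauchy, n, repetitions, rng)
    return gaussian_iqr, cauchy_iqr


if __name__ == "__main__":
    gaussian_iqr, cauchy_iqr = spread_comparison()
    print(f"Spread of Gaussian sample means: {gaussian_iqr:.3f}")
    print(f"Spread of Cauchy sample means:   {cauchy_iqr:.3f}")
```

For the Gaussian the spread of the sample mean shrinks as n grows, whereas for the Cauchy the mean of n draws is as widely scattered as a single draw, so collecting more data does not help.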

Now, even within Extremistan, taking risks that can have catastrophic results is different from taking risks that cannot. For example, the naive investor may put all his money in volatile instruments, hoping to make a good profit and get out before the system collapses, which it eventually will because it belongs to Extremistan. This amounts to playing Russian roulette for a large sum of money. No matter how big the prize, one should never take part if there is a risk of ruin (Taleb, 2007). The non-naive investor follows, for example, a barbell strategy together with a Kelly criterion (Taleb, 2012), investing for example 80% of his money in very safe instruments such as Treasury bonds and only 20% in volatile instruments. In this way the investor is exposed to positive Black Swans and, in the case of a negative one, never goes bankrupt.
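The arithmetic behind the 80/20 split described above can be sketched in a few lines. The function name, the zero return on the safe part and the two scenarios (total loss and a tenfold gain on the volatile part) are assumptions chosen for illustration; the Kelly sizing mentioned in the text is not modelled here.

```python
def final_wealth(initial, safe_fraction, risky_return, safe_return=0.0):
    """Wealth after one period for a portfolio split between safe and risky assets.

    Returns are expressed as fractions: -1.0 is a total loss, 10.0 a tenfold gain.
    """
    safe_part = initial * safe_fraction * (1.0 + safe_return)
    risky_part = initial * (1.0 - safe_fraction) * (1.0 + risky_return)
    return safe_part + risky_part


if __name__ == "__main__":
    scenarios = {"negative Black Swan": -1.0, "positive Black Swan": 10.0}
    for label, risky_return in scenarios.items():
        all_in = final_wealth(100.0, 0.0, risky_return)
        split = final_wealth(100.0, 0.8, risky_return)
        print(f"{label}: all-in -> {all_in:.1f}, 80/20 split -> {split:.1f}")
```

With everything in volatile instruments a total loss is an absorbing state, since the investor is left with nothing to continue with. With the split, the worst case is capped at the loss of the risky 20%, while the upside of a large positive event is still captured.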

To identify the important theoretical elements in the case of catastrophic risks, and to fix ideas, in the discussion section I will draw on my experience in an environment of high complexity, uncertainty and risk: mountaineering. I think that what is discussed in that context is valid for, and transferable to, different issues concerning national security, such as combat, the fight against organized crime, or the current coronavirus pandemic. In the conclusions section I will try to outline these connections.

Discussion

On December 29, 2000, after 23 years of exploration of the mountain by our expedition leader Carlos Rangel, we reached the summit of Picacho del Diablo by a new route more than 1000 m high, on a side of the mountain that had not been climbed before. Everyone who has climbed a mountain has a relatively good understanding of the visible risks, such as the possibility of falling while climbing, the risk of a crevasse, or passing below an avalanche zone. But very few manage to internalize the invisible risks, either because they are events that occur very rarely and are therefore seldom observed, or because they are the product of second- or third-order effects. As Pit Schubert recounts in his book “Safety and Risk”, originally published in German in 1994, in which he brings together 25 years of research on alpine safety in Germany, for most of the history of mountaineering practically every link in the safety chain was so fragile that fatalities occurred just as easily from a piton giving way as from a rope or a carabiner breaking. This was so common that practically no one questioned the (silent) risk of tying in only at the waist instead of also wearing the rather cumbersome chest harness. Such was the level of insecurity that a leader fall was simply not contemplated in climbing culture. This is how I was taught by my mentor Carlos Rangel, a classic climber with multiple first ascents. However, as materials improved, the safety chain really began to serve its purpose and mountaineering became popular; adventure gave way to difficulty-oriented climbing, where leader falls are very common. Yet the culture of tying in only at the waist prevailed, which allowed infrequent but catastrophic events to occur. For example, when a climber who is leading with the rope running between his legs falls, the rope flips him upside down, because the tie-in point is exactly at the center of gravity, and his head strikes the wall (or even the ground if he is at the start of the route).

Following Taleb's ideas on the logic of risk taking (2001, 2007, 2010, 2012, 2018, 2020), these silent risks, which go unnoticed because they occur so rarely, contain another very important lesson, about the non-ergodicity of the real world. The concept of ergodicity is central in physics, specifically in thermodynamics, and states that, under the right conditions, calculating the mean value of a process over time is equivalent to calculating the mean value over many equivalent systems at a specific time. (This set of equivalent systems is known as an ensemble.) In other words, the time average is equal to the ensemble average. To clarify the importance of this point, we paraphrase an example from N. N. Taleb (2018). Think of a group of 100 climbers who spend one day on a route with various objective hazards, such as crevasses in the snow or falling rocks. If 4 of them die, we could say that the probability of dying on a similar ascent is 4%, and if we repeated the experiment with another 100 climbers the next day, we would expect the same result. An important fact to highlight is that the death of climber number 15 does not affect the survival of climber number 16. Now, if instead of an ensemble of 100 climbers we take a single climber who travels the route on consecutive days, and the climber dies on day 20, will that affect the result on day 21? Of course: there is no system anymore. This is technically known as an absorbing barrier. In the real world, where there are absorbing barriers, the ensemble probability is not equal to the time probability, and therefore ergodicity does not hold. In general terms, this non-ergodicity is interpreted as path dependence, that is, the system has a history that affects its possible future outcomes.
This path dependence leads us to a key conclusion: no matter how capable our climber is, if there is a risk of catastrophe, however small, it will eventually materialize.
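The difference between the group of 100 climbers on one day and a single climber on consecutive days can be put in numbers. The sketch below takes the 4% daily risk from the example and assumes, for simplicity, that the risk is the same and independent every day; the function names are illustrative.

```python
import math


def survival_probability(daily_risk, days):
    """Probability that one climber is still alive after `days` consecutive days."""
    return (1.0 - daily_risk) ** days


def days_until_survival_at_or_below(daily_risk, threshold):
    """Smallest number of days after which survival probability is at or below threshold."""
    return math.ceil(math.log(threshold) / math.log(1.0 - daily_risk))


if __name__ == "__main__":
    risk = 0.04  # the 4% daily risk from the example above
    for days in (1, 20, 100):
        print(f"{days:>3} days: survival probability {survival_probability(risk, days):.3f}")
    print("Days until survival drops to 50% or less:",
          days_until_survival_at_or_below(risk, 0.5))
```

A risk that looks like a modest 4% for the group on a single day leaves one repeatedly exposed climber with less than even odds after 17 days, and the survival probability keeps shrinking towards zero as the exposure continues.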

Now, a paradox arises from the previous paragraphs. Given that, on the one hand, materials and safety systems fail and, on the other, we should not take catastrophic risks no matter how small, a rational climber would use multiple safety systems to have redundancy, would protect every step where he perceives even the slightest risk of catastrophe (death), and so on. But doing so would make his progress not only laborious but very slow, increasing the time probability of risk. This was precisely the paradox we faced on Picacho del Diablo, the highest mountain in Baja California.

Avoiding catastrophic risks implies entering a hyper-paranoid mode. In the case of our climb, that would have meant carrying double or triple the protection gear, which would have increased the weight to be carried and reduced our speed. That in turn would have increased our time on the mountain (and with it the time probability of risk), which as a secondary effect would have forced us to carry more food, again increasing the weight and reducing the speed, thereby generating a positive feedback loop. Following this logic of risk taking, we would have ended up needing to set up multiple intermediate camps and to include porters to carry the extra supplies, turning our exploration into what is historically known in mountaineering as a siege: essentially a military operation to conquer the mountain.

Even more interesting, had we opted for that type of expedition we would have ended up with a very complex system (the expedition) with multiple feedbacks and couplings. For such systems Perrow (2011) has proposed that normal accidents inevitably occur. These are systemic accidents, that is, accidents without a single recognizable cause, which arise from the interactions of the parts that make up the system and occur infrequently but with catastrophic consequences. Historical evidence shows that heavy siege-type expeditions do eventually generate tragedies, such as Everest in 1996, when 12 people died, 2014, with 16 deaths, or the earthquake-triggered avalanche of 2015, in which 22 mountaineers died.

Our strategy on El Picacho was based on a preventive, non-reactive hyper-paranoia. For a whole year we prepared ourselves to face all kinds of eventualities in the mountains: we climbed by day, at night and in the rain; instead of wearing climbing shoes we trained in boots; we did routes at speed; we tried all kinds of self-rescue maneuvers and different combinations of equipment so as to select only what was indispensable; we built up our physical condition as if we were going to climb the mountain twice in a row; we went on outings where we did not eat or drink water; we camped without a tent or sleeping bag, and so on. In this way, through prevention, we pushed the absorbing barriers far enough away that during the expedition we could dispense with many protections (without this constituting a significant risk). We calculated the food so that it would run out near summit day, and we would descend the mountain with little or no food. With this we managed to be very light throughout the expedition, moving very fast and thus reducing the time probability of catastrophe.

As is often the case in real-world problems, non-naive risk-taking strategies arise from constrained multi-objective optimization. We want to be hyper-paranoid about the existence of absorbing barriers, but at the same time we must be hyper-paranoid about exposure over time, bearing in mind that we operate with limited resources, information and time. The solution that my mentor and friend Carlos Rangel arrived at empirically, after 23 years of trying to climb that mountain, is formally known in complex systems as criticality. The criticality hypothesis proposes that systems in a dynamic regime between order and randomness reach the highest level of computational capability (potential to solve problems) and achieve an optimal balance between robustness (self-organization) and flexibility (emergence). If a system is too organized, too robust, it may be unable to react to new problems or changes in its environment and therefore, through exposure over time to a recurring risk, it will eventually hit an absorbing barrier, that is, it will perish. If, on the contrary, the system is too emergent, too flexible, it cannot retain the good solutions it has found to the problems it faces or to its current environment, and it will perish all the same. It is in the balance between these two characteristics that the system has the best chance of surviving over time. If this is so, we would expect complex systems to tend towards criticality, and this is indeed what is observed (Hidalgo et al., 2014; Ramírez-Carrillo et al., 2018, and references therein). Furthermore, we know that at criticality systems are also at their maximum complexity and Fisher information (Gershenson and Fernández, 2012; Wang et al., 2011), the latter being related to stability and sustainability (Ahmad et al., 2016).
Finally, along the same lines, we have recently proposed (López-Corona and Padilla, 2019) that systems at their maximum complexity and Fisher information (critical systems) are also antifragile.

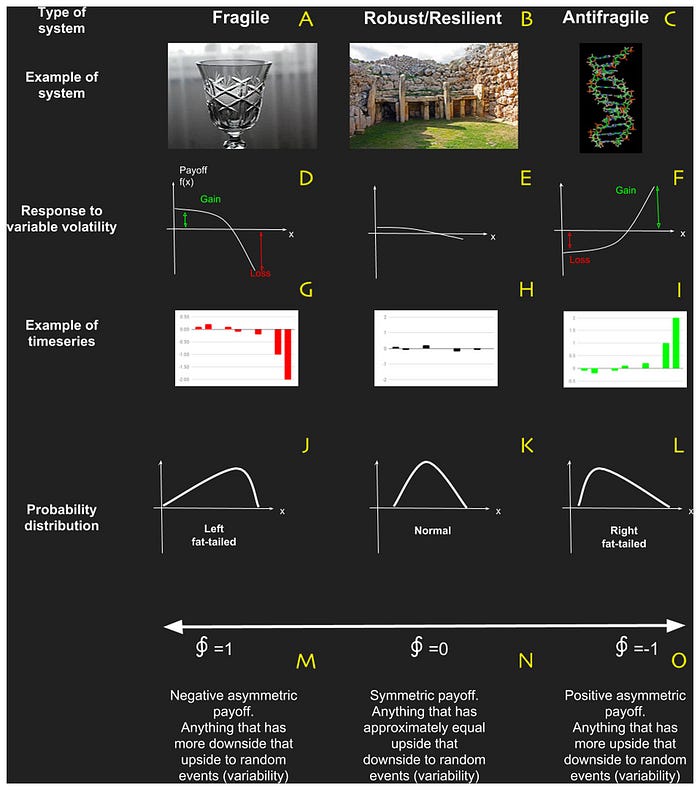

“Some things benefit from shocks; they thrive and grow when exposed to volatility, randomness, disorder, and stressors and love adventure, risk, and uncertainty … there is no word for the exact opposite of fragile. Let us call it antifragile” (Taleb, 2012). Systems of this type are characterized by being convex in their payoff space (see Fig. 1), that is, they gain in payoff when they are perturbed.

Author's own figure, based on Antifragile by N. N. Taleb and used in the author's “Complectere” (2019). A crystal glass is fragile because it breaks when faced with external volatility (children playing around it). A construction like the one shown has remained standing despite changes in climate, social systems, etc.; it is robust. Life is the quintessential example of antifragility: life always flourishes in the face of chance, since chance is a fundamental part of the evolutionary process.

(A–C) are examples of fragile, robust/resilient and antifragile systems respectively; (D–F) are examples of profile responses to perturbations; (J–L) are examples of typical probability distributions; and (M–O) are the characteristic values obtained with the metric based on complexity change. Taken from: https://doi.org/10.7717/peerj.8533/fig-4

Conclusions

We think that, in order to survive risks of ruin in Extremistan, it is necessary to learn how to be paranoid, but not naively so, when paranoia is called for. We have discussed this in the context of mountaineering, but we believe that what we have identified there applies, for example, to the current coronavirus epidemic that began in China and seems to be turning into a pandemic.

In this regard, the obligatory reference is the note by Joe Norman and collaborators (2020), which we summarize below. The new coronavirus that emerged from Wuhan, China, has been identified as a highly lethal and contagious strain, and we are clearly facing an extreme, long-tailed process as a result of modern international connectivity, which in turn favors the nonlinear spread of the disease (Bar-Yam, 1997). As outlined in this essay, long-tailed processes have special attributes that make traditional risk management approaches inadequate. In particular, it is key to invoke the (non-naive) precautionary principle (Taleb et al., 2014), which sets out the conditions under which traditional cost-benefit analyses should not be used and should give way to much more forceful action, in this case, for example, limiting or stopping international travel to reduce the connectivity of the process as much as possible. This undoubtedly entails economic, social and political costs that must be considered, but given that, over time, exposure to this type of long-tailed event with risk of ruin implies the certainty of eventual extinction, it may well turn out to be the satisfactory solution. Because of non-ergodicity, although there is a very high probability that humanity will survive exposure to any one of these pandemic events, the probability of surviving repeated exposure to them tends to zero over time (time probability). The problem is that decision makers may be reluctant to take “extreme” measures, because important parameters of a pathogen's epidemiology, such as the rate of spread, the basic reproduction number (R0) or the mortality rate, are often underestimated.
The authors of the note therefore highlight the need for a precautionary approach, both for the current case and for potential future pandemics, which should include, among other measures, restrictions on mobility (international, national, regional and within cities) in the early stages of an outbreak, especially when little is known about the epidemiological parameters of the pathogen. They conclude that, while there will clearly be a cost associated with decreased mobility in the short term, failing to take these types of measures could potentially cost us everything, if not in this specific event, then in a future one.

In their 2016 article, Albino et al. argue that our actions against an opponent have essentially three types of effect, depending on the opponent's level of fragility: (1) a fragile opponent will be damaged, possibly catastrophically; (2) a robust opponent will be largely unaffected, retaining much or all of his previous characteristics; (3) some opponents, however, actually gain strength from our attacks, a property that has recently been called antifragility. Traditional perspectives on military strategy implicitly assume fragility, which limits their validity and results in surprise, and they assume a specific end state rather than a general system condition as the target.

This distinction could be crucial, for example, in the fight against drug trafficking. In a work presented at the Conference on Complex Systems (CCS) in 2017, Ollin Langle-Chimal, a former student of mine from the Master's in Data Science, proposes a very interesting approach to the fight against drug trafficking using network theory. The work notes that many strategies have been proposed to dismantle the operating networks of these criminal groups, the most common being the attempt to capture the cartel leaders. This strategy, the author argues, has not had a significant positive impact in reducing the influence of these groups or the violence throughout the country. In this sense, complex network theory emerges as an alternative for understanding the underlying dynamics of this non-trivial phenomenon. Using a semi-automated text mining tool, the author built a network of the characters in Anabel Hernández's book “Los Señores del Narco” in order to analyze its topological and dynamic properties. In this way, attacks directed at the most relevant nodes of the network (the capos) can be simulated using different measures of centrality, after which the robustness of the network can be measured, for example in terms of the size of the giant component, that is, optimal percolation. The author also analyzed the communities that remain in the network after these attacks, looking at the exact number of deleted nodes required to dismantle the giant component. With this approach it is possible not only to propose a minimum number of nodes to remove in order to dismantle the network, but also to see whether there are differences between the most influential nodes and those that are important to the network topology. These types of approaches could become relevant for developing strategies to disable complex criminal structures.
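The idea of a targeted attack measured by the size of the giant component can be illustrated on a toy graph. The sketch below is not the method or the data of the work described above: the network is hypothetical (two tightly knit groups joined by a single low-profile "broker"), degree is used as the only centrality measure, and the node names are invented.

```python
from collections import deque
from itertools import combinations


def build_toy_network():
    """Hypothetical network: two fully connected groups linked through one broker."""
    graph = {}

    def add_edge(a, b):
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)

    for group in (["A1", "A2", "A3", "A4"], ["C1", "C2", "C3", "C4"]):
        for a, b in combinations(group, 2):
            add_edge(a, b)
    add_edge("broker", "A1")
    add_edge("broker", "C1")
    return graph


def giant_component_size(graph, removed=frozenset()):
    """Size of the largest connected component once `removed` nodes are deleted."""
    seen = set(removed)
    largest = 0
    for start in graph:
        if start in seen:
            continue
        seen.add(start)
        queue = deque([start])
        size = 0
        while queue:  # breadth-first search over the surviving nodes
            node = queue.popleft()
            size += 1
            for neighbour in graph[node]:
                if neighbour not in seen:
                    seen.add(neighbour)
                    queue.append(neighbour)
        largest = max(largest, size)
    return largest


def highest_degree_node(graph):
    """Most connected node; ties are broken alphabetically."""
    return max(sorted(graph), key=lambda node: len(graph[node]))


if __name__ == "__main__":
    network = build_toy_network()
    leader = highest_degree_node(network)
    print("Intact network:", giant_component_size(network))
    print(f"After removing {leader}:", giant_component_size(network, {leader}))
    print("After removing broker:", giant_component_size(network, {"broker"}))
```

In this toy case, removing the best-connected node leaves a larger giant component than removing the poorly connected broker, which is one simple way to see the difference between the most influential nodes and those that are important to the network topology.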

In our own work on ecosystem antifragility (Equihua et al., 2020) we reviewed the existing literature and identified a set of characteristics associated with resilience and antifragility. Accordingly, a strategy against organized crime that takes the complexity of the phenomenon into account could first work to weaken the criminal network to be attacked and, once it is fragile, carry out forceful attacks not only on the main criminals but also on the nodes that matter most to the criminal network.

It seems that the concept has been permeating military narratives. In a 2017 article in Small Wars Journal, Nathan A. Jennings argues that the US Army is embracing antifragility through its Security Force Assistance Brigades policy. In this regard, he notes that General Milley has declared that “the level of uncertainty, the speed of instability and the potential for significant interstate conflict is greater than it has been since the end of the Cold War in 1989-91”. Jennings thus believes that by adopting an intentionally distributed barbell strategy, in addition to other force modernization programs, the US Army can diversify into a more antifragile enterprise in the face of endemic unpredictability. The article by Albino and collaborators (2016), whose authors include William G. Glenney IV of the Chief of Naval Operations Strategic Studies Group, states that the foundations of antifragility as a basis for military strategy are evolution, learning and adaptation. The authors further say that an antifragile system must be dynamic and that, at the level of human institutions, it can be intentionally constructed through exploitative mechanisms that give rise to antifragility.

We therefore think it would be very useful for these concepts to be introduced into the postgraduate courses offered at CESNAV, considering, for example, the competencies that we have identified for the teaching of complexity (López-Corona et al., 2019), namely:

- Systemic competence: being able to understand the root causes of complex problems, identifying, for example, the various interactions between causes and effects. Being able to understand the dynamics, cascading effects, as well as the feedback and inertia that inevitably appear in the approach of a problem.

- Ethical competence: because complexity is fully expressed in real-world problems, students must know the concepts and methods needed to weigh values and evidence on specific problems, putting into practice the principles of justice, equity and socio-environmental integrity of sustainable development.

- Anticipatory competence: requires students to be able to distinguish how a complex problem changes by analyzing it at different spatial or temporal scales. Similarly, they must understand the different ways of building scenarios (trend, strategic, etc.).

- Preventive competence: because of their complexity, real-world problems tend to be wicked, and it is therefore necessary to know how to evaluate them and to understand when it is absolutely necessary to intervene and when it is better to allow the system to self-organize.

- Skin-in-the-game competence: students must realize that, to behave ethically when the solution of a complex problem involves decision-making, those who advocate the solution must never insulate themselves from responsibility for the consequences of their analysis and of the decisions they make.

- Strategic competence: being able to design and implement collaborative interventions and strategies to address real-world problems. Improving the ability to act under scenarios of incomplete information and inconclusive evidence is especially important.

- False narrative detection competence (or BS detection): because complex problems can be analyzed from different perspectives and disciplines, they are an easy target for false narratives from charlatans or pseudoscientists. It is therefore important that students are able to detect false narratives and expose them. He who sees a fraud and does not shout “fraud!” is a fraud.

- “Do not teach birds to fly” competence: students must learn to avoid the typical academic practice of underestimating practical solutions and the empirical experience of practitioners, and must not believe that, because they can describe the mechanics of a soccer ball, they qualify as the new “Messi”.

- Last but not least, collaborative competence: without it, the previous ones are useless, because to successfully implement these competencies it is essential that students become agents of change who create and facilitate both analysis and solutions of real world problems.

Note: the reader should also see https://www.nature.com/articles/s41567-020-0921-x

References

Ahmad, N., Derrible, S., Eason, T., & Cabezas, H. (2016). Using Fisher information to track stability in multivariate systems. Royal Society open science, 3(11), 160582.

Albino, DK, Friedman, K., Bar-Yam, Y., & Glenney IV, WG (2016). Military strategy in a complex world. arXiv preprint arXiv:1602.05670.

Bar-Yam, Y. (1997). Dynamics of complex systems.

Equihua Zamora M, Espinosa M, Gershenson C, López-Corona O, Munguia M, Pérez-Maqueo O, Ramírez-Carrillo E. 2020. Ecosystem antifragility: Beyond integrity and resilience. PeerJ: https://peerj.com/articles/8533/

Gershenson, C., & Fernández, N. (2012). Complexity and information: Measuring emergence, self‐organization, and homeostasis at multiple scales. Complexity, 18(2), 29–44.

Jennings, N. (2017) Security Force Assistance Brigades: The US Army Embraces Antifragility. Small Wars Journal https://smallwarsjournal.com/jrnl/art/security-force-assistance-brigades-the-us-army-embraces-antifragility

Lewis, TG, Mackin, TJ, & Darken, R. (2011). Critical infrastructure as complex emergent systems. International Journal of Cyber Warfare and Terrorism (IJCWT), 1(1), 1–12.

Lewis, TG (2010). Cause-and-Effect or Fooled by Randomness?. Homeland Security Affairs, 6(1).

López-Corona O. and Hernández G. (2019). What does theoretical Physics tell us about Mexico’s December Error crisis. RESEARCHERS.ONE, https://www.researchers.one/article/2019-01-3.

López-Corona O. and Padilla P. (2019). Fisher Information as unifying concept for Criticality and Antifragility, a primer hypothesis. RESEARCHERS.ONE, https://www.researchers.one/article/2019-11-16

López-Corona O., Ramírez-Carrillo E., Cossio-Guerrero C., González-González C., López-Grether S., Martínez L., Osorio-Palacios M. and Tovar-Bustamante G. (2019). How to teach complexity? Do it by facing complex problems, a case of study with weather data in natural protected areas in Mexico. RESEARCHERS.ONE, https://www.researchers.one/article/2019-01-2.

Norman, J., Bar-Yam, Y., & Taleb, NN (2020). Systemic Risk of Pandemic via Novel Pathogens–Coronavirus: A Note.

Perrow, C. (2011). Normal accidents: Living with high risk technologies-Updated edition. Princeton university press.

Taleb, NN (2001). Fooled by Randomness: The Hidden Role of Chance in Life and in the Markets. New York: Random House. ISBN 978–0–8129–7521–5. Second ed., 2005. ISBN 1–58799–190-X.

Taleb, NN (2007). The Black Swan: The Impact of the Highly Improbable. New York: Random House and Penguin Books. ISBN 978–1–4000–6351–2. https://archive.org/details/blackswanimpacto00tale. Expanded 2nd ed., 2010. ISBN 978–0812973815.

Taleb, NN (2010). The Bed of Procrustes: Philosophical and Practical Aphorisms. New York: Random House. ISBN 978–1–4000–6997–2. https://archive.org/details/bedofprocrustesp00tale. Expanded 2nd ed., 2016. ISBN 978–0812982404.

Taleb, NN (2012). Antifragile: Things That Gain from Disorder. New York: Random House. ISBN 978–1–4000–6782–4. https://archive.org/details/isbn_9781400067824.

Taleb, NN, Read, R., Douady, R., Norman, J., & Bar-Yam, Y. (2014). The precautionary principle (with application to the genetic modification of organisms). arXiv preprint arXiv:1410.5787.

Taleb, NN (2018). Skin in the Game: Hidden Asymmetries in Daily Life. New York: Random House. ISBN 978–0–4252–8462–9.

Taleb, NN (2018). Why Did The Crisis of 2008 Happen?. RESEARCHERS.ONE, https://www.researchers.one/article/2018-09-16.

Taleb, NN (2020). Statistical Consequences of Fat Tails: Real World Preasymptotics, Epistemology, and Applications. RESEARCHERS.ONE, https://www.researchers.one/article/2020-01-21.

Wang, XR, Lizier, JT, & Prokopenko, M. (2011). Fisher information at the edge of chaos in random Boolean networks. Artificial life, 17(4), 315–329.